By: W. Barry Nixon, COO, PreemploymentDirectory.com

In the age of the ‘Great Resignation’ and ‘remote working,’ recruiting has become more challenging than at any time in modern history. Employers are struggling to keep good employees and to replace those who leave. It is an unprecedented time in recruiting and talent acquisition.

On top of this challenge, we have the rapid deployment of remote work, driven mostly by the COVID-19 pandemic. It is often overlooked that employers were able to switch to this new way of working practically overnight because the technology to make it happen was already available. That technology enabled the transition; however, as with all new directions, there are new challenges to face. As a chief scientist at a computer research lab once quipped to me, “we have solved the problem, now we must fight the solution.”

For all the good that technology has enabled, there is an ugly downside.

In the article ‘Desperate employers and remote hiring: A recipe for candidate fraud?’, Lindsey Zuloaga, chief data scientist at HireVue, a hiring technology provider, said, “Technology and remote work have changed the opportunities available for cheating in the hiring funnel.” She added, “Like any type of fraud, the cheaters continue to invent new techniques, and the victims of fraud must always keep up.”

According to HireVue’s 2022 Global Trends Report, “Three out of four respondents are now using virtual interviews to some degree, with 20% relying solely on them for interview needs. Additionally, nearly half of respondents (45%) are using some form of automation in their hiring process, and 20% plan to implement it in the next 6-12 months.”

This heightened focus on virtual interviews, driven largely by the emerging ‘work remotely’ culture, is putting a lot of pressure on employers to manage the risks associated with this part of the hiring process. Along with managing the usual risks that accompany hiring, e.g., exaggerated work experience and education credentials, inflated resumes, fake degrees and fabricated references, employers now have a new emerging risk to deal with: deepfakes.

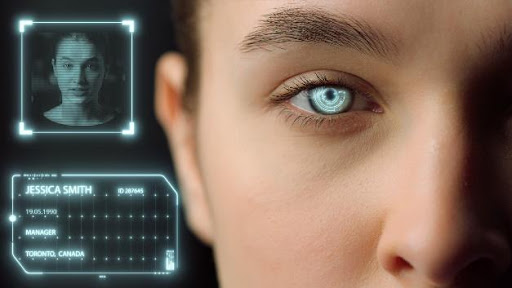

What is a Deepfake?

Deepfake technology uses artificial intelligence to create convincing synthetic media, such as a video, an image or a recording, that has been altered and manipulated to misrepresent someone as doing or saying something that was not actually done or said. Through this technology, fake job applicants can overlay a different individual’s face on their own to conduct a video interview under a different identity. While the notion may sound ridiculous, deepfakes can be alarmingly realistic.

Some of the Risks Posed by Deepfakes

Protocol.com shared a real situation that recently occurred in which a new hire and the interview candidate were not the same person. The husband of one of its readers, who works in IT at a “midsized private company,” realized that his new hire “John” was not the same candidate he had interviewed. He had different hair and glasses, different life details and couldn’t answer basic questions related to the job. “John” ended up quitting before HR could fully interrogate him. After that, he was unreachable.1

I am sure many of you are thinking this is preposterous, since you may not have experienced this situation. However, Nick Shah, president of IT staffing company Peterson Technology Partners, said, “This has been happening in the IT industry for quite some time. Having someone feed you answers in a video interview is common.” He added, “There’s a terminology in the industry that people call ‘proxy.’ It’s your face on the camera, but somebody else is speaking on your behalf.”2

According to Shah, there are companies that advertise that they will help you with your interviews and charge $500 to $700 per interview, and instances of fake candidates have ramped up during the pandemic.3

According to a warning issued by the FBI’s Internet Crime Complaint Center (IC3), scam artists are exploring avenues to exploit the remote-work revolution. The warning noted that complaints are rolling in about a scheme in which fraudulent candidates apply for remote-work positions and use deepfake technology to hide their identity during the video interview process. While the reported attempts so far have been foiled, the FBI said its presumption is that hackers are hoping to secure the position, gain access to company logins and steal sensitive customer and client information.

Fraudsters have been targeting mainly tech-heavy jobs. These include information technology, engineering, database management and other roles that would likely require access to private information.

Brian Kropp, chief of HR Research at Gartner, said he’s heard it all: employees working remotely for two companies simultaneously, blatant resume lies and people taking technical assessment tests for each other.

The FBI also reported the use of stolen personal identifying information to apply for remote positions.

What Can You Do to Protect Your Organization against Fake Candidates?

- One very obvious solution is to emphasize on the front end that your organization values high integrity, and to let applicants know that you have zero tolerance for dishonesty, disrespectful treatment and misrepresentation of information.

- Require that video interviews occur in a well-lit room, on a computer and without headphones.

- Do not allow candidates to video interview without their camera on.

- Some basic tips for spotting deepfake videos include:

  - Pay close attention to a candidate’s mouth and audio to make sure they sync up.

  - Watch for actions and lip movements of the person on camera that do not fully match the audio of the person speaking. For example, coughing, sneezing or other audible actions may not line up with what is shown visually.

  - Observe the frequency, or lack, of blinking.

  - Check for symmetry in eye color and earrings; a mismatch can reveal a deepfake.

  - Look at eye movement to see whether the candidate is glancing at anyone else in the room.

  - Look at whether glasses have a glare.

- Ask a candidate to share their screen. This will often weed the fakes out.

- Use a blended approach that combines video interviews with a follow-up interview.

- Consider having the applicant go to a local ‘interview center’ that is set up for virtual interviews and has staff to verify that the person is alone.

- Use strong background checks and onboarding procedures.

- Blinking detection, image forensics and occlusion detection can be used to identify deepfakes, and AI algorithms can analyze artefacts to reveal digital manipulation. As Ann-Kathrin Freiberg of BioID says, “If a machine makes something, then a machine would normally be able to detect the traces.”4

- Companies like Microsoft have also come out with software that can detect deepfakes, and the MIT Media Lab created a website to help people practice deepfake identification. The lab also put out a checklist of video elements to observe closely.

- Employers should invest in training personnel involved in the hiring process about how to spot deepfake technology.

- Use strong identity verification tools and processes. Hint: verifying a Social Security number alone is not a sufficient means of identifying a person.

- The US Department of State, the US Department of the Treasury, and the Federal Bureau of Investigation (FBI) in May warned US organizations not to inadvertently hire North Korean IT workers. These contractors weren’t typically engaged directly in hacking, but were using their access as sub-contracted developers within US and European firms to enable the nation’s hacking activities, the agencies warned.

- Finally, in the HR Dive article ‘The FBI says fake job applicants are on the prowl. How can HR protect itself?’, FBI Supervisory Special Agent Brian Blauser said employers can side-step the threat of an online fraudster by requiring at least one in-person interview. If a candidate is in the same city or area, requiring an in-person interview may be an easy lift. If the candidate is from outside the region, it may be worth discussing the budgetary potential of flying them in. Even mentioning an in-person interview may scare off a potential fraudster.

Conclusion

While the issue of deepfake job applicants is only just emerging, HR pros must be on the alert given the increasing use of video and virtual interviewing. They must be very diligent in deploying identity verification tools and significantly increase their hiring staff’s knowledge of how to spot deepfakes. Now is the time to be proactive with preventative measures. Don’t wait until you have a major breach or incident to put in place the necessary measures for dealing with deepfakes.

Deepfakes pose a legitimate new threat to the hiring process. While most candidates act in good faith and are simply trying to do their best to get the job, make no mistake: there are others with malicious intent. Cheating existed in many forms long before the rise of remote hiring, but employers must nevertheless be vigilant in taking action to thwart these new methods of deceit and adopt innovative solutions to meet the challenge.

Bibliography:

1. Burton, Amber, reporter, “What happens when the new hire and the interview candidate are not the same person,” February 3, 2022. https://www.protocol.com/newsletters/protocol-workplace/spotting-fake-interviews?rebelltitem=1#rebelltitem1.

2. Ibid.

3. Ibid.

4. Burt, Chris, “BioID shares encouraging research on deepfakes and biometric liveness detection with EAB,” February 25, 2022. https://www.biometricupdate.com/202202/bioid-shares-sobering-research-on-deepfakes-and-biometric-liveness-detection-with-eab.